Ancient Greek graph-based syntactic embeddings

Questions

Since at least Levy & Goldberg's (2014) Dependency-Based Word Embeddings, it has been noted that incorporating syntactic information in the training of word embeddings can change the type of linguistic relations encoded by the resulting representations. Experiments on Ancient Greek vector space modelling (e.g. Rodda et al. 2019) have not yet exploited the relative wealth of syntactically annotated data available for the language (compared to most other historical languages). The questions are therefore:

- Can we use dependency information in Ancient Greek treebanks to train decent-quality syntactic word embeddings?

- Would the trained spaces reflect a different kind of relation between words than the ones reflected in classic word embeddings?

Challenges

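- Ancient Greek, as a historical language, shows much more linguistic variation than English, on which most syntactic-embedding experiments are based. This may be a problem for token frequency, since variation spreads the occurrences of a word over more forms and contexts, leaving many items with few attestations.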

- Ancient Greek treebanks not only follow different annotation schemes but, since the language has no native speakers, their annotation is also highly subjective, so the parse of a sentence can differ widely from one annotator to another.

Syntactic embedding architecture

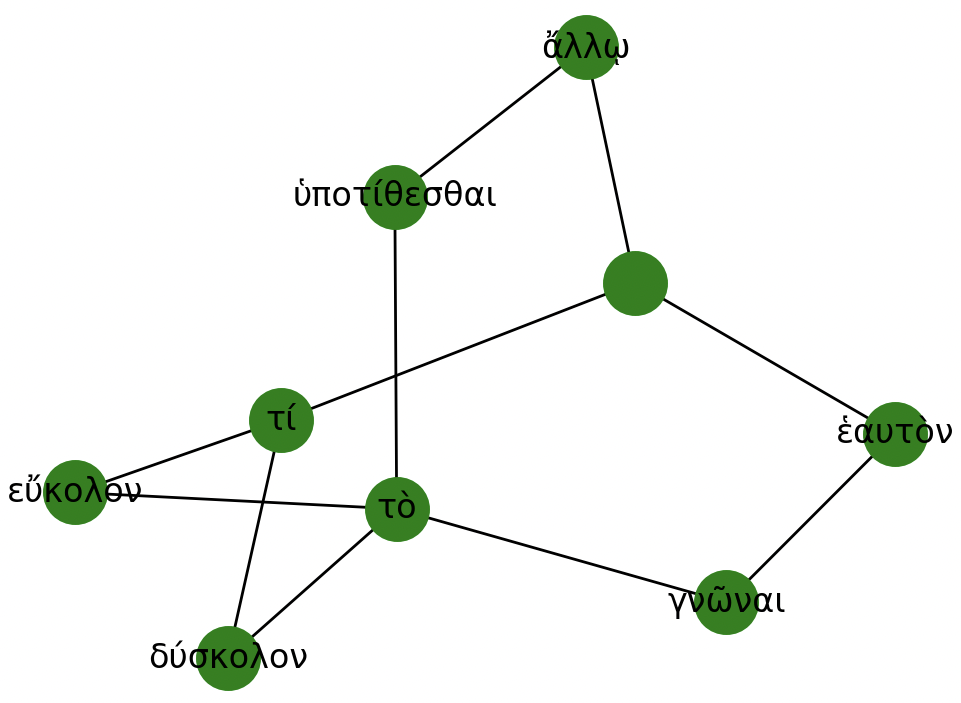

The framework I used is roughly the one described in Al-Ghezi & Kurimo's (2020) Graph-based Syntactic Word Embeddings. You can read more about this architecture here, but in sum: given a series of constituency parse trees in parenthetical form (each corresponding to a sentence in a treebank), we generate a supergraph consisting of the union of all graph-converted trees. The supergraph is then given as input to node2vec, which trains word vectors for the nodes in the graph. For example, given the following two sentences:
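To make the supergraph step more concrete, here is a minimal Python sketch; it is not the code used for this project. It parses bracketed trees, turns each tree into parent-child edges and takes the union of all edges. Two details are my own assumptions rather than something taken from Al-Ghezi & Kurimo (2020): word leaves are merged across trees simply by their string, while each internal (phrase) node gets its own identifier, and repeated edges are kept as counts that could serve as edge weights. The toy English trees are hypothetical.

```python
from collections import defaultdict
from itertools import count


def parse_tree(s):
    """Parse a bracketed tree like "(S (NP dog) (VP barks))" into nested lists.

    Internal nodes become [label, child, ...]; leaves (words) stay strings.
    """
    tokens = s.replace("(", " ( ").replace(")", " ) ").split()
    pos = 0

    def parse():
        nonlocal pos
        if tokens[pos] == "(":
            label = tokens[pos + 1]
            pos += 2  # skip "(" and the label
            node = [label]
            while tokens[pos] != ")":
                node.append(parse())
            pos += 1  # skip ")"
            return node
        pos += 1
        return tokens[pos - 1]  # a leaf

    return parse()


def tree_to_edges(tree, ids):
    """List (parent, child) edges; internal nodes get fresh ids, words keep their string."""
    edges = []

    def visit(node):
        if isinstance(node, str):
            return node  # word node: same string = same node in every tree
        node_id = f"{node[0]}#{next(ids)}"  # hypothetical id scheme, unique per node
        for child in node[1:]:
            edges.append((node_id, visit(child)))
        return node_id

    visit(tree)
    return edges


def build_supergraph(bracketed_trees):
    """Union of all tree graphs: undirected adjacency map with edge counts as weights."""
    graph = defaultdict(lambda: defaultdict(int))
    ids = count()  # shared counter, so internal ids never clash across trees
    for s in bracketed_trees:
        for u, v in tree_to_edges(parse_tree(s), ids):
            graph[u][v] += 1
            graph[v][u] += 1
    return graph


# Hypothetical toy trees: "barks" and "the" occur in both.
trees = [
    "(S (NP (D the) (N dog)) (VP (V barks)))",
    "(S (NP (D the) (N cat)) (VP (V barks)))",
]
g = build_supergraph(trees)
print(len(g), sorted(g["barks"]))  # prints: 16 ['V#11', 'V#5']
```

Because `barks` appears in both toy trees, it becomes a single node attached to a verb node in each tree; shared word nodes like this are what join the per-sentence graphs into one connected supergraph.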

Τί δύσκολον; Τὸ ἑαυτὸν γνῶναι.

'What is hard? To know yourself.'

and

Τί εὔκολον; Τὸ ἄλλῳ ὑποτίθεσθαι.

'What is easy? To advise another.'

The supergraph method would give the following as input to node2vec:

Data

Five sources have been used to train the models, all providing their treebanks in XML format. Four of them use the AGDT annotation scheme:

- Gorman Treebanks

- PapyGreek Treebanks

- Pedalion Treebanks

- The Ancient Greek Dependency Treebank (PerseusDL)
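Since every source comes as XML, a rough sketch of the first preprocessing step may help. It reads an AGDT-style `<sentence>` whose `<word>` elements carry `id`, `lemma` and `head` attributes, drops stopwords and writes the head-dependent structure out as a bracketed tree of lemmata. This is not the bespoke script mentioned in the Preprocessing section, and PROIEL files would need separate handling. The sample annotation, the one-item stopword list, the bracket format and the choice to re-attach a removed word's dependents to its nearest kept ancestor are all hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

# Hypothetical AGDT-style annotation of Τὸ ἑαυτὸν γνῶναι (illustration only).
SAMPLE = """
<treebank>
  <sentence id="1">
    <word id="1" form="Τὸ" lemma="ὁ" head="3"/>
    <word id="2" form="ἑαυτὸν" lemma="ἑαυτοῦ" head="3"/>
    <word id="3" form="γνῶναι" lemma="γιγνώσκω" head="0"/>
  </sentence>
</treebank>
"""

STOPWORDS = {"ὁ"}  # hypothetical stopword list, matched on lemmata


def sentence_to_tree(sentence, stopwords=STOPWORDS):
    """Turn one <sentence> of head-dependent pairs into a bracketed tree of lemmata."""
    words = {w.get("id"): w for w in sentence.iter("word")}

    def kept_head(word_id):
        # Climb past stopword heads so their dependents attach to the nearest
        # kept ancestor; "0" is the artificial root.
        head = words[word_id].get("head")
        while head != "0" and words[head].get("lemma") in stopwords:
            head = words[head].get("head")
        return head

    children = defaultdict(list)
    for wid, w in words.items():
        if w.get("lemma") not in stopwords:
            children[kept_head(wid)].append(wid)

    def render(wid):
        lemma = words[wid].get("lemma")
        kids = children.get(wid, [])
        if not kids:
            return lemma  # a leaf: just the lemma
        return "(" + " ".join([lemma] + [render(k) for k in kids]) + ")"

    return " ".join(render(root) for root in children.get("0", []))


treebank = ET.fromstring(SAMPLE.strip())
trees = [sentence_to_tree(s) for s in treebank.iter("sentence")]
print(trees)  # ['(γιγνώσκω ἑαυτοῦ)']
```

The article is dropped as a stopword and the remaining lemmata keep their head-dependent nesting, which is the kind of bracketed input the supergraph step expects.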

Preprocessing

The dependency information (head-dependent relations) in each treebank file is first used to generate parenthetical trees, with bespoke methods for the PROIEL and the AGDT schemes. Stopwords are removed, and lemmata are used rather than token forms. The resulting trees are then processed following the supergraph framework to create one large graph-based structure. The preprocessing scripts can be found here.

Training

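node2vec learns node vectors in two stages: it samples biased random walks over the graph, then trains a skip-gram word2vec model on those walks as if they were sentences. The plain-Python sketch below is not the node2vec implementation used here; it only shows both ingredients: a second-order walk controlled by the return parameter `p` and the in-out parameter `q`, and the (centre, context) pairs a skip-gram model would draw from a walk with a window of 5. The toy graph, the seed and the `p`/`q` values are hypothetical, and the pair generator uses the full window, whereas word2vec implementations typically sample a smaller effective window at each position.

```python
import random


def node2vec_walk(graph, start, length, p=1.0, q=1.0, rng=random):
    """One second-order biased random walk in the style of node2vec.

    graph: {node: {neighbour: weight}} for an undirected graph.
    p: return parameter; a larger p makes stepping straight back less likely.
    q: in-out parameter; q > 1 keeps the walk local, q < 1 pushes it outward.
    """
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        nbrs = list(graph[cur])
        if not nbrs:
            break  # dead end
        if len(walk) == 1:
            weights = [graph[cur][x] for x in nbrs]  # first step: edge weights only
        else:
            prev = walk[-2]
            weights = []
            for x in nbrs:
                w = graph[cur][x]
                if x == prev:
                    weights.append(w / p)  # return to the previous node
                elif x in graph[prev]:
                    weights.append(w)  # stay at distance 1 from prev
                else:
                    weights.append(w / q)  # move away from prev
        walk.append(rng.choices(nbrs, weights=weights, k=1)[0])
    return walk


def skipgram_pairs(walk, window=5):
    """(centre, context) pairs within `window` positions, as skip-gram would see them."""
    pairs = []
    for i, centre in enumerate(walk):
        lo, hi = max(0, i - window), min(len(walk), i + window + 1)
        pairs.extend((centre, walk[j]) for j in range(lo, hi) if j != i)
    return pairs


# Hypothetical toy graph and settings.
toy = {
    "a": {"b": 1, "c": 1},
    "b": {"a": 1, "c": 1},
    "c": {"a": 1, "b": 1, "d": 1},
    "d": {"c": 1},
}
rng = random.Random(42)
walks = [node2vec_walk(toy, n, length=6, p=1.0, q=0.5, rng=rng) for n in toy for _ in range(3)]
print(walks[0], len(skipgram_pairs(walks[0], window=5)))
```

With real data, the walks would be handed to a word2vec implementation, for example gensim's `Word2Vec(sentences=walks, window=5, min_count=1, sg=1)`; with `min_count=1` no node is discarded for being rare, which matters for a sparse historical corpus.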
node2vec is then used to train word embeddings from the large graph-based structure. As is good practice, different parameter settings should be tried; the results below come from a model trained with a window of 5 and a min_count of 1.

Results

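Both columns of the comparison in this section are nearest-neighbour lists: every other word is ranked by how similar its vector is to that of the query word. The sketch below assumes cosine similarity (the measure is not stated here) and, for the count-based side, a textbook PPMI weighting of a word-context count table; neither is claimed to be the exact setup of the models compared, and the toy vectors and counts are hypothetical.

```python
import math
from collections import Counter


def ppmi_vectors(cooc):
    """Count-based vectors: PPMI-weighted rows of a {(word, context): count} table."""
    total = sum(cooc.values())
    word_tot, ctx_tot = Counter(), Counter()
    for (w, c), n in cooc.items():
        word_tot[w] += n
        ctx_tot[c] += n
    contexts = sorted(ctx_tot)
    vectors = {}
    for w in word_tot:
        row = []
        for c in contexts:
            n = cooc.get((w, c), 0)
            # PMI = log P(w,c) / (P(w) P(c)); unseen pairs and negative PMI become 0.
            pmi = math.log(n * total / (word_tot[w] * ctx_tot[c])) if n else 0.0
            row.append(max(0.0, pmi))
        vectors[w] = row
    return vectors


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_neighbours(vectors, query, k=5):
    """The k words whose vectors are most cosine-similar to the query word's vector."""
    q = vectors[query]
    scored = sorted(((cosine(q, v), w) for w, v in vectors.items() if w != query), reverse=True)
    return [w for _, w in scored[:k]]


# Hypothetical toy data, not taken from either model.
toy = {"free": [1.0, 0.0], "slave": [0.9, 0.1], "rich": [0.5, 0.5], "house": [0.0, 1.0]}
print(nearest_neighbours(toy, "free", k=2))  # ['slave', 'rich']
counts = {("free", "city"): 3, ("free", "master"): 1, ("slave", "master"): 4}
print(ppmi_vectors(counts))
```

The same ranking function works for both kinds of space; only the way the vectors are built differs, which is why differences between the two neighbour lists can be attributed to the training signal rather than to the retrieval step.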
Below is an example comparing the 5-nearest neighbours of ἐλεύθερος 'free/freedom' in the syntactic embedding model and in a count-based model (with PPMI). The count-based model returns words that all belong to the semantic field of 'free/freedom' or to its topical scope. The neighbours from the syntactic model can also be considered broadly within the semantic field of 'free/freedom', but their relation is more functional than topical: they are all adjectives, which can be expected to occur in distributions similar to that of ἐλεύθερος.

5-nearest neighbours for ἐλεύθερος 'free, freedom'

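| Traditional count-based model | Syntactic embeddings |
|---|---|
| δοῦλος 'slave' | αἰσχρός 'shameful' |
| ἐλευθερία 'freedom' | λωίων 'better' |
| δουλεύω 'be a slave' | εὐσχήμων 'decorous' |
| πολίτης 'citizen' | εὐτελής 'cheap' |
| δεσπότης 'master' | πλούσιος 'rich' |

Credits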

The neighbours from the count-based model shown in the table above were extracted by Silvia Stopponi from a model she trained. The method presented here and other results from this experiment were presented in more detail at the International Conference on Historical Linguistics (ICHL25, August 2022, Oxford, United Kingdom), in a paper titled Evaluating Language Models for Ancient Greek: Design, Challenges, and Future Directions (by myself and Barbara McGillivray, Silvia Stopponi, Malvina Nissim & Saskia Peels-Matthey).

References

M. Rodda, P. Probert, and B. McGillivray. 2019. Vector space models of Ancient Greek word meaning, and a case study on Homer. Traitement Automatique des Langues, 60(3), pages 63–87.

R. Al-Ghezi and M. Kurimo. 2020. Graph-based Syntactic Word Embeddings. In Proceedings of the Graph-based Methods for Natural Language Processing (TextGraphs), pages 72–78, Barcelona, Spain (Online). Association for Computational Linguistics.

O. Levy and Y. Goldberg. 2014. Dependency-Based Word Embeddings. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 302–308, Baltimore, Maryland. Association for Computational Linguistics.
